galore: memory-efficient llm training by gradient low-rank projection|galore gradient low rank : Bacolod Instead of focusing just on engineering and system efforts to reduce memory consumption, we went back to fundamentals. We looked at the slow-changing low-rank structure of .

webJulho 5, 2021. ¡Haz clic para puntuar esta entrada! Minha Cunhada, é uma história em quadrinhos distribuída pela toomics, que tem 29 episódios. Onde ler a história em .

0 · low rank gradient training

1 · gradient lowest rank projection

2 · galore vs lora

3 · galore memory efficient training

4 · galore low rank training

5 · galore huggingface

6 · galore gradient low rank

7 · More

8 · 8 bit galore llm training

O empréstimo pessoal DigioGrana possui algumas condições interessantes para o consumidor. Ele pode ser parcelado em até 24 vezes, com . Ver mais

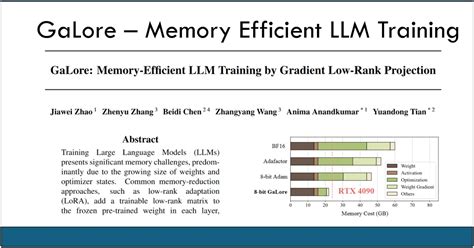

galore: memory-efficient llm training by gradient low-rank projection*******A paper that proposes a novel training strategy for large language models (LLMs) that reduces memory usage by up to 82.5% without sacrificing performance. .GaLore is a training strategy that reduces memory usage by projecting the gradient of the weight matrix to a low-rank subspace, while allowing full-parameter learning. It .Gradient Low-Rank Projection (GaLore) is a memory-efficient low-rank training strategy that allows full-parameter learning but is more memory-efficient than common . Their method. The authors of GaLore propose to apply low-rank approximation to the gradients instead of weights, reducing memory usage similarly to .

This work proposes GaLore, a training strategy that allows full-parameter learning but is more memory-efficient than common low-rank adaptation methods .Instead of focusing just on engineering and system efforts to reduce memory consumption, we went back to fundamentals. We looked at the slow-changing low-rank structure of .

By using GaLore with a rank of 512, the memory footprint is reduced by up to 62.5%, on top of the memory savings from using 8-bit Adam or Adafactor optimizer. This paper proposes a memory-efficient training method called GaLore (Gradient Low-Rank Projection) for large language models (LLMs). GaLore aims to .

GaLore is a memory-efficient training strategy for large language models (LLMs) that leverages the low-rank structure of gradients. It projects the gradient matrix .

GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection works (e.g., it is a matrix with specific structures), proving many of its properties (e.g., Lemma3.1, . Their method. The authors of GaLore propose to apply low-rank approximation to the gradients instead of weights, reducing memory usage similarly to LoRA as well as opening the door to pretraining on consumer hardware. The authors prove that the gradients become low-rank during training, with a slowly evolving projection . GaLore is a memory-efficient training strategy for large language models (LLMs) that leverages the low-rank structure of gradients. It projects the gradient matrix into a low-rank subspace using projection matrices P and Q, reducing memory usage for optimizer states.In this work, we propose Gradient Low-Rank Projection (GaLore), a training strategy that allows full-parameter learning but is more memory-efficient than common low-rank adaptation methods such as LoRA. Our approach reduces memory usage by up to 65.5% in optimizer states while maintaining both efficiency and performance for pre-training . In this work, we propose Gradient Low-Rank Projection (GaLore), a training strategy that allows full-parameter learning but is more memory-efficient than common low-rank adaptation methods such as LoRA. Our approach reduces memory usage by up to 65.5% in optimizer states while maintaining both efficiency and .In this work, we propose Gradient Low-Rank Projection (GaLore), a training strategy that allows full-parameter learning but is more memory-efficient than common low-rank adaptation methods such as LoRA. Our approach reduces memory usage by up to 65.5% in optimizer states while maintaining both efficiency and performance for pre-training . Their method. The authors of GaLore propose to apply low-rank approximation to the gradients instead of weights, reducing memory usage similarly to LoRA as well as opening the door to pretraining on consumer hardware. The authors prove that the gradients become low-rank during training, with a slowly evolving projection .

By using GaLore with a rank of 512, the memory footprint is reduced by up to 62.5%, on top of the memory savings from using 8-bit Adam or Adafactor optimizer.galore: memory-efficient llm training by gradient low-rank projection galore gradient low rank By using GaLore with a rank of 512, the memory footprint is reduced by up to 62.5%, on top of the memory savings from using 8-bit Adam or Adafactor optimizer.GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection works (e.g., it is a matrix with specific structures), proving many of its properties (e.g., Lemma3.1, Theorem3.2, and Theorem3.6). In contrast, traditional PGD mostly treats the objective as a general blackbox nonlinear function, and study the gradients in the vector space only.A team of researchers has developed a memory-efficient training strategy called GaLore for large language models (LLMs). LLMs are models that can understand and generate human language. Training these models requires a lot of memory, which is expensive and consumes a lot of energy. GaLore reduces the memory usage by up to 65.5% while .galore: memory-efficient llm training by gradient low-rank projection GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection (arxiv.org) GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection. (. arxiv.org. ) 5 points by victormustar 10 .galore gradient low rank GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection (arxiv.org) GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection. (. arxiv.org. ) 5 points by victormustar 10 .GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection. Training Large Language Models (LLMs) presents significant memory challenges, predominantly due to the growing size of weights and optimizer states. Common memory-reduction approaches, such as low-rank adaptation (LoRA), add a trainable low-rank matrix to . In this work, we propose Gradient Low-Rank Projection (GaLore), a training strategy that allows full-parameter learning but is more memory-efficient than common low-rank adaptation methods such as LoRA. Our approach reduces memory usage by up to 65.5% in optimizer states while maintaining both efficiency and .

WEBAnger management should not attempt to deny a person’s anger. Anger is a protective emotion. But it often functions to protect a fragile ego, which may involve guilt , shame, and anxiety.

galore: memory-efficient llm training by gradient low-rank projection|galore gradient low rank